Playing with ChatGPT

Large Language Models – or LLMs – have been in the news for well over a year now as people explore the ways these sorts of tools can be used in any number of situations. Like many people, I've dabbled with some of these as well. The locally-run models are interesting but terribly slow, while the public services are rapid and most certainly not free[1].

Last year I was curious to see whether this technology could be used at the day job in order to provide a more complete description of student development in the written sections of progress reports. This is generally an area where specificity is useful, but the time needed to write a detailed analysis of someone's growth is often greater than the amount of time that teachers have available. The idea that I wanted to test was this: given we already know a person's attendance, participation, past progress scores, and material coverage, can a 400-word summary be provided to the teacher ahead of time? This would allow them to read through the feedback and make edits where appropriate, while ensuring the students have realistic, evidence-based feedback to help them with future studies.
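As a rough sketch of the idea, and not the system that was tested at the day job, the data we already hold could be folded into a single prompt for a language model. Everything named here is hypothetical: the `StudentRecord` fields, the `build_prompt` helper, and the wording of the prompt itself. No LLM is actually called; the function only assembles the text that would be sent.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class StudentRecord:
    # Hypothetical fields, chosen only to mirror the data listed above.
    name: str
    attendance_rate: float              # fraction of classes attended, 0.0 to 1.0
    participation: str                  # short free-text note from the teacher
    past_scores: list[int] = field(default_factory=list)
    material_covered: list[str] = field(default_factory=list)


def build_prompt(record: StudentRecord, word_limit: int = 400) -> str:
    """Assemble one prompt string from the facts already on file."""
    scores = ", ".join(str(s) for s in record.past_scores) or "none recorded"
    material = ", ".join(record.material_covered) or "none recorded"
    return (
        f"Write a progress report summary of at most {word_limit} words "
        f"for the student {record.name}.\n"
        f"Attendance: {record.attendance_rate:.0%}\n"
        f"Participation: {record.participation}\n"
        f"Past progress scores: {scores}\n"
        f"Material covered: {material}\n"
        "Base every statement on the data above and do not invent details."
    )
```

The teacher would still read and edit whatever comes back; the prompt only ensures the draft starts from the evidence rather than from a blank page.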

After a couple of days' worth of effort, it was clear that there were two LLMs capable of delivering the core of what I was looking for. One even had the added benefit of being able to translate across multiple languages, making it a clear winner. I delivered my findings to senior management at the day job who agreed that it was an interesting area of study, but they decided to not pursue any sort of automated feedback system just yet. The one or two vague sentences from the bulk of our over-worked teachers were clearly sufficient in their eyes, so I set my investigations of LLMs aside given that none of my personal computers really had the power to use them in a realistic fashion[2].

However, as LLMs, image generators, and video creation engines become more powerful, it no longer makes sense to ignore them completely. There are a number of areas where these sorts of tools would be incredibly useful, particularly the Large Language Models which can be trained on the writings of an individual.

Jordan Peterson has mentioned on a few occasions how people he works with are doing things like putting the entirety of Christian texts into LLMs in order to ask the machine questions. Peterson Academy will do something similar, putting entire courses into an LLM so that students can "ask the course" a question. Dr. Peterson has even had all of his writings put into an LLM so that he could essentially ask himself a question. His glowing reviews of this budding technology have made me incredibly curious.

Could a Large Language Model be used to generate course material that is customised to an individual student? Could it take into account their strengths and weaknesses? Could it recommend further study on matters the student may require to successfully complete a course? This would be an incredibly interesting area of study, but it's a bit beyond my abilities at the moment. Before getting into something like this, it might be better to start testing LLMs with personalised data.

Dabbling with ChatGPT

Looking at the hardware requirements to run a Large Language Model system locally, I would need to invest about $3,500 at a minimum into a workstation. Several avenues were explored to reduce costs, including starting with a used system that could be upgraded in sections. Unfortunately, the cost always worked out to somewhere in the vicinity of $3,500 to $4,000 in the first year, not including the cost of electricity and network bandwidth. This is several thousand dollars more than I am willing to invest at this time, so a cheaper option was required.

Fortunately, OpenAI has a $20/month offering for a fully-featured ChatGPT account. Last night I created an account and started playing around by asking the machine questions, hoping it would provide answers that I already knew[3]. In each case the response was correct and, in one instance, the answer included information that I had never heard before. Within five minutes I discovered two other sources that corroborated the new information and was impressed because ChatGPT taught me something new while I was testing its ability to understand my words.

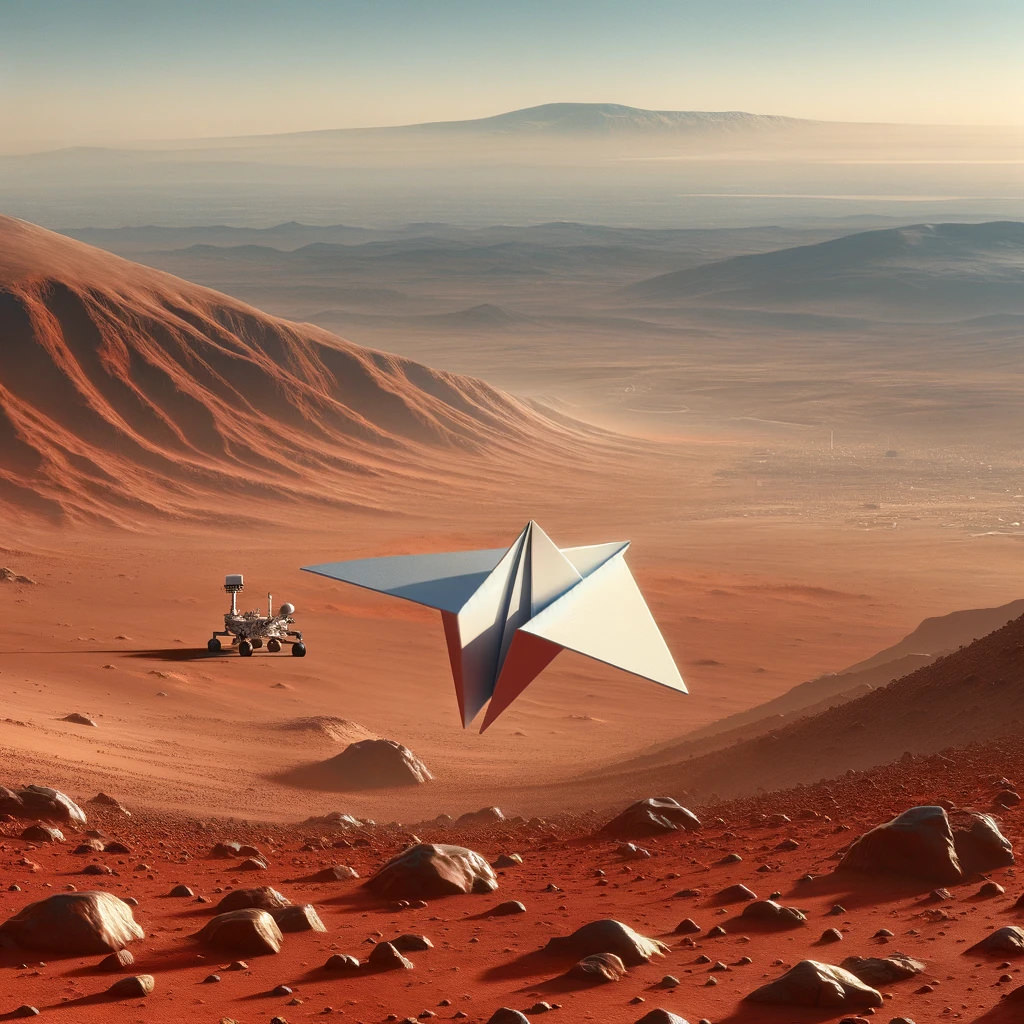

Next I wanted to see how well the DALL-E image generator worked. The first request was "Show me a paper airplane flying over the surface of Mars with the Curiosity rover in the distance". This is what I received back:

I then uploaded a picture of Ayumi and asked "Show me a picture of Ayumi playing a game of Go against a cat". This is how DALL-E responded:

Very interesting.

But could it write something the same way I might? Would it know to use an excessive number of commas, ellipses, and relative clauses?

Today I tested this out by uploading my entire public blogging archive dating from 2006 to today, as well as every public social post I have ever written to Twitter, App.Net, and Nice.social. This amounts to almost 4,000 blog posts and over a quarter-million social posts. Replies and any post shorter than 100 characters were excluded, as they would lack any context to work with.
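The exclusion rule is simple enough to sketch in a few lines. This is only an illustration of the filter described above, not the script that was actually used; the dictionary keys `text` and `is_reply` are assumptions about how an exported post might look.

```python
MIN_LENGTH = 100  # posts shorter than this many characters are dropped


def keep_post(post: dict) -> bool:
    """Return True for posts that are not replies and are long enough."""
    # "is_reply" and "text" are hypothetical keys for an exported post.
    if post.get("is_reply", False):
        return False
    return len(post.get("text", "").strip()) >= MIN_LENGTH


def filter_posts(posts: list) -> list:
    """Keep only the posts with enough context to be worth uploading."""
    return [post for post in posts if keep_post(post)]
```

A post of exactly 100 characters is kept, since only posts shorter than that were excluded.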

To test, I kept the request vague: "Can you write a blog post using my writing style?"

What came back was interesting:

Embracing the Unfamiliar: My Journey with Ayumi and the Art of Coding

In the ever-evolving landscape of life, I find myself continually drawn to the unfamiliar, seeking growth at the crossroads of personal adventure and technological exploration. This journey, accompanied by my faithful Shiba Inu, Ayumi, mirrors the unpredictable nature of coding - a realm where each challenge is a step towards mastering the art.

Ayumi, with her spirited demeanor, teaches me the value of curiosity and resilience. Our travels across Japan, from the bustling streets of Tokyo to the serene landscapes of Kyoto, are more than mere physical movements; they are a metaphor for navigating the complexities of coding interfaces, databases, and APIs. Each destination, much like every coding challenge, presents its own set of puzzles, demanding adaptability, patience, and an eagerness to learn.

The philosophy of coding, much like life itself, is an endless loop of learning and unlearning. It's about embracing the bugs and errors as stepping stones, not stumbling blocks. Ayumi's unwavering spirit in the face of new territories and her joy in discovery remind me of the satisfaction found in solving a particularly stubborn bug or deploying a feature that perfectly serves its purpose.

Our adventures are more than just tales of a man and his dog; they are a narrative of the symbiosis between technology and human experience. As Ayumi explores new landscapes with fervor, I delve into the intricacies of coding, each of us on our own quest for understanding, yet together on a journey of mutual growth.

In this dance with the unknown, Ayumi and I find harmony. The challenges of the road and code, rather than daunting, become sources of inspiration. They remind us that at the heart of every adventure, whether it be through the forests of Japan or the virtual forests of code, lies the opportunity for discovery, learning, and, ultimately, a deeper connection with the world around us.

Lots of commas. Relative clauses galore. Even a pair of semi-colons. The only thing missing was an ellipsis in the first or second paragraph. The intertwining of disparate ideas in a semi-unresolved manner also seems to capture some of my longer-form blog posts from years past where I tried – and failed – to hone the craft of writing thoughtful articles exploring a philosophy that I could not yet lay down in words.

Absolutely remarkable.

Bringing this whole thing back to the idea of using LLMs with personalised education, using a tool with this sort of perspicacity would make it possible to construct personalities for one or more "teachers" who could interact as realistic avatars with people studying a topic. While this would not eliminate the isolation a person feels when learning something on their own, it could drastically reduce the need to find secondary or tertiary sources for answers. An LLM with an entire library of materials on astrophysics, for example, could answer questions from students in the persona of the teacher leading the course or – if such things were permitted – through the voice and mannerisms of Stephen Hawking, Michio Kaku, Neil deGrasse Tyson, or Edna Krabappel. This could drastically improve "engagement" in a learning environment, something that is often a challenge with students who are easily distracted or bored.

At the moment, no artificial teacher can replace a real human who is invested in teaching people a subject they are passionate about. We still have a long way to go before technology can completely replace the benefits of learning from physically present educators. However, a well-trained digital assistant could go a long way to reducing the workload of a teacher or professor, answering the simpler questions or repeating the same points for students who need the repetition. This would allow digital systems to be where skills and knowledge are acquired so that classrooms can be dedicated to the application of the skills and knowledge.

A pie-in-the-sky dream? Perhaps. But we are a lot closer to this being a reality than a lot of people might realise.

[1] Yes, there are free offerings out there, but these services generally offer the previous version of their software or an incredibly limited subset of functions. When exploring a tool with as much press coverage and potential as an LLM, it's generally better to invest some resources in order to build a better understanding of what potential may exist.

[2] I do have a decent computer ... I think. It's just not decent enough to run LLMs with any sort of velocity. A single question can take upwards of 30 to 45 minutes to answer. Maybe one day I'll invest in a dedicated machine for this purpose ... but not for a long while.

[3] Do not blindly believe anything found on the Internet.